1. Data Loader

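When training or testing machine learning and deep learning models, data is usually fed to the model in batches. A data loader builds these batches for you (slicing the dataset, optionally shuffling it each epoch), so the model can train efficiently even when the dataset is large.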
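To make the idea of batching concrete, here is a minimal sketch using `torch.utils.data.DataLoader`. The toy tensors (`features`, `targets`) and the batch size of 4 are assumptions chosen only for illustration; they are not part of the digits example that follows.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Toy data (assumption): 10 samples with 4 features each, labels 0..9
features = torch.arange(40, dtype=torch.float32).reshape(10, 4)
targets = torch.arange(10)

# TensorDataset pairs each feature row with its label
dataset = TensorDataset(features, targets)

# shuffle=False keeps the order fixed so the batches are easy to inspect
loader = DataLoader(dataset, batch_size=4, shuffle=False)

batch_sizes = []
for x_batch, y_batch in loader:
    batch_sizes.append(len(y_batch))
    print(x_batch.shape, y_batch.tolist())

print(batch_sizes)  # [4, 4, 2]
```

Ten samples with a batch size of 4 give two full batches and one leftover batch of 2.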

2. Building a Handwritten Digit Recognition Model

import torch
import torch.nn as nn
import torch.optim as optim
import torchvision.transforms as transforms
import matplotlib.pyplot as plt
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split

# Use the GPU when one is available
device = 'cuda' if torch.cuda.is_available() else 'cpu'
print(device)  # cuda

digits = load_digits()

x_data = digits['data']
y_data = digits['target']
print(x_data.shape)  # (1797, 64)

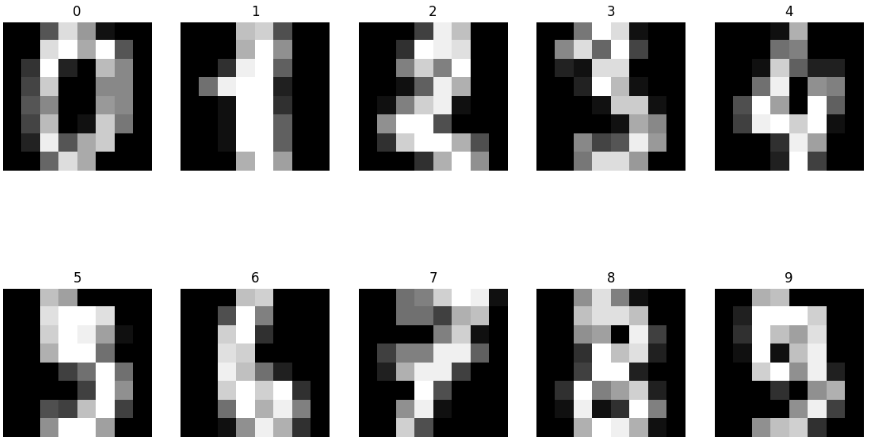

print(y_data.shape)  # (1797,)

# Show the first 10 samples as 8x8 grayscale images
fig, axes = plt.subplots(nrows=2, ncols=5, figsize=(14, 8))
for i, ax in enumerate(axes.flatten()):
    ax.imshow(x_data[i].reshape((8, 8)), cmap='gray')
    ax.set_title(y_data[i])
    ax.axis('off')

# Convert the NumPy arrays to tensors (float features, integer class labels)
x_data = torch.FloatTensor(x_data)
y_data = torch.LongTensor(y_data)
print(x_data.shape)  # torch.Size([1797, 64])
print(y_data.shape)  # torch.Size([1797])

x_train, x_test, y_train, y_test = train_test_split(x_data, y_data, test_size=0.2, random_state=2024)
print(x_train.shape, y_train.shape)  # torch.Size([1437, 64]) torch.Size([1437])
print(x_test.shape, y_test.shape)  # torch.Size([360, 64]) torch.Size([360])

loader = torch.utils.data.DataLoader(
    dataset=list(zip(x_train, y_train)),
    batch_size=64,
    shuffle=True
)

# drop_last=False (the default): the final, smaller batch is kept
imgs, labels = next(iter(loader))
# imgs  # next() returns a single batch, so this holds 64 samples
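The effect of `drop_last` on the number of batches can be checked directly. This is a small sketch that assumes only the sizes from the split above (1437 training samples, batch size 64) and uses zero-filled placeholder tensors instead of the real digits.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

n_samples, batch_size = 1437, 64  # sizes taken from the training split above

# Placeholder data (assumption): only the sample count matters here
dummy = TensorDataset(
    torch.zeros(n_samples, 64),
    torch.zeros(n_samples, dtype=torch.long),
)

keep_last = DataLoader(dummy, batch_size=batch_size, shuffle=True)  # drop_last=False by default
drop_last = DataLoader(dummy, batch_size=batch_size, shuffle=True, drop_last=True)

# Size of the final partial batch when it is kept
remainder = n_samples - (len(keep_last) - 1) * batch_size

print(len(keep_last), len(drop_last))  # 23 22
print(remainder)                       # 29
```

With the default setting, each epoch therefore runs 22 full batches plus one batch of 29 samples.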

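# Plot the 64 images of the first batch with their labels
fig, axes = plt.subplots(nrows=8, ncols=8, figsize=(14, 14))
for ax, img, label in zip(axes.flatten(), imgs, labels):
    ax.imshow(img.reshape((8, 8)), cmap='gray')
    ax.set_title(str(label.item()))  # .item() shows "7" instead of "tensor(7)"
    ax.axis('off')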

# A single linear layer: 64 pixel values in, 10 class scores out
model = nn.Sequential(
    nn.Linear(64, 10)
)
optimizer = optim.Adam(model.parameters(), lr=0.01)
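Note that the `device` string chosen at the top is only printed; in the code shown in this post the model and tensors are never moved, so training runs on the CPU. If you did want to use the GPU, one possible approach is sketched below. It assumes the same `nn.Linear(64, 10)` layer and a made-up random batch (`x_fake`); in the real loop each `x_batch` and `y_batch` would need the same `.to(device)` call.

```python
import torch
import torch.nn as nn

device = 'cuda' if torch.cuda.is_available() else 'cpu'

# Move the model's parameters to the selected device
model = nn.Sequential(nn.Linear(64, 10)).to(device)

# Made-up batch (assumption): 8 samples with 64 features each
x_fake = torch.rand(8, 64).to(device)

# Inputs and parameters must live on the same device for the forward pass
out = model(x_fake)
print(out.shape, out.device.type)
```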

epochs = 50
criterion = nn.CrossEntropyLoss()

for epoch in range(epochs + 1):
    sum_losses = 0
    sum_accs = 0

    # Each iteration receives one batch of up to 64 samples
    for x_batch, y_batch in loader:
        y_pred = model(x_batch)
        loss = criterion(y_pred, y_batch)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # .item() stores a plain float so the autograd graph is not kept alive
        sum_losses += loss.item()

        y_prob = nn.Softmax(1)(y_pred)
        y_pred_index = torch.argmax(y_prob, dim=1)
        acc = (y_batch == y_pred_index).float().sum() / len(y_batch) * 100
        sum_accs += acc.item()

    # After each epoch, report the loss and accuracy averaged over the batches
    avg_loss = sum_losses / len(loader)
    avg_acc = sum_accs / len(loader)
    print(f'Epoch {epoch: 4d}/{epochs} Loss: {avg_loss: .6f} Accuracy: {avg_acc:.2f}')

Epoch 0/50 Loss: 1.891644 Accuracy: 59.09

Epoch 1/50 Loss: 0.271366 Accuracy: 90.61

Epoch 2/50 Loss: 0.155282 Accuracy: 94.77

Epoch 3/50 Loss: 0.138113 Accuracy: 95.52

Epoch 4/50 Loss: 0.105262 Accuracy: 97.15

Epoch 5/50 Loss: 0.087942 Accuracy: 97.42

Epoch 6/50 Loss: 0.081044 Accuracy: 97.55

Epoch 7/50 Loss: 0.067027 Accuracy: 98.10

Epoch 8/50 Loss: 0.062933 Accuracy: 98.29

Epoch 9/50 Loss: 0.052343 Accuracy: 98.57

Epoch 10/50 Loss: 0.050577 Accuracy: 98.78

Epoch 11/50 Loss: 0.050791 Accuracy: 98.63

Epoch 12/50 Loss: 0.039593 Accuracy: 99.25

Epoch 13/50 Loss: 0.051212 Accuracy: 98.78

Epoch 14/50 Loss: 0.044485 Accuracy: 98.71

Epoch 15/50 Loss: 0.033195 Accuracy: 99.32

Epoch 16/50 Loss: 0.031275 Accuracy: 99.78

Epoch 17/50 Loss: 0.036495 Accuracy: 99.03

Epoch 18/50 Loss: 0.033133 Accuracy: 98.98

Epoch 19/50 Loss: 0.025209 Accuracy: 99.59

Epoch 20/50 Loss: 0.024205 Accuracy: 99.66

Epoch 21/50 Loss: 0.021365 Accuracy: 99.73

Epoch 22/50 Loss: 0.022471 Accuracy: 99.59

Epoch 23/50 Loss: 0.019897 Accuracy: 99.73

Epoch 24/50 Loss: 0.018282 Accuracy: 99.80

Epoch 25/50 Loss: 0.017660 Accuracy: 99.80

Epoch 26/50 Loss: 0.021324 Accuracy: 99.52

Epoch 27/50 Loss: 0.024314 Accuracy: 99.39

Epoch 28/50 Loss: 0.018680 Accuracy: 99.80

Epoch 29/50 Loss: 0.016977 Accuracy: 99.86

Epoch 30/50 Loss: 0.016509 Accuracy: 99.86

Epoch 31/50 Loss: 0.015076 Accuracy: 99.86

Epoch 32/50 Loss: 0.013349 Accuracy: 99.93

Epoch 33/50 Loss: 0.017213 Accuracy: 99.73

Epoch 34/50 Loss: 0.019616 Accuracy: 99.46

Epoch 35/50 Loss: 0.012428 Accuracy: 99.86

Epoch 36/50 Loss: 0.012883 Accuracy: 99.86

Epoch 37/50 Loss: 0.013028 Accuracy: 99.93

Epoch 38/50 Loss: 0.011940 Accuracy: 99.80

Epoch 39/50 Loss: 0.012129 Accuracy: 99.86

Epoch 40/50 Loss: 0.014371 Accuracy: 99.71

Epoch 41/50 Loss: 0.012114 Accuracy: 99.93

Epoch 42/50 Loss: 0.011818 Accuracy: 99.80

Epoch 43/50 Loss: 0.008474 Accuracy: 99.93

Epoch 44/50 Loss: 0.007661 Accuracy: 100.00

Epoch 45/50 Loss: 0.007813 Accuracy: 99.93

Epoch 46/50 Loss: 0.007223 Accuracy: 100.00

Epoch 47/50 Loss: 0.006277 Accuracy: 100.00

Epoch 48/50 Loss: 0.006476 Accuracy: 100.00

Epoch 49/50 Loss: 0.006501 Accuracy: 100.00

Epoch 50/50 Loss: 0.006197 Accuracy: 100.00
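The training loop passes the raw model outputs straight to `nn.CrossEntropyLoss`, with no softmax layer in the model. That works because the loss applies log-softmax internally. The sketch below checks this on made-up logits and labels (both are assumptions, not values from the digits run).

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(5, 10)             # made-up scores: 5 samples, 10 classes
labels = torch.tensor([3, 1, 4, 1, 5])  # made-up targets

# Built-in loss applied directly to raw scores
ce = nn.CrossEntropyLoss()(logits, labels)

# Same value via log-softmax followed by negative log-likelihood
log_probs = F.log_softmax(logits, dim=1)
manual = F.nll_loss(log_probs, labels)

# Same value by hand: mean of -log p(correct class)
by_hand = -log_probs[torch.arange(5), labels].mean()

print(torch.allclose(ce, manual), torch.allclose(ce, by_hand))  # True True
```

This is why the model ends with `nn.Linear(64, 10)` and softmax is only applied afterwards, when probabilities are wanted for inspection.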

plt.imshow(x_test[10].reshape((8, 8)), cmap='gray')
print(y_test[10])
# tensor(7)

y_pred = model(x_test)
y_pred[10]

tensor([ -8.5668,  -0.4511, -11.3228,  -3.0213,  -2.3378,  -4.9395, -11.4141,
         12.5245,  -1.9677,   5.0611], grad_fn=<SelectBackward0>)

# The values above are the raw scores (logits) for each class

y_prob = nn.Softmax(1)(y_pred)
y_prob[10]

tensor([6.9175e-10, 2.3150e-06, 4.3957e-11, 1.7715e-07, 3.5086e-07, 2.6015e-08,
        4.0121e-11, 9.9942e-01, 5.0800e-07, 5.7340e-04],
       grad_fn=<SelectBackward0>)

# The tensor above holds the probability of each class
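To see how the scores turn into probabilities, the softmax can be computed by hand from the ten values printed above. This sketch copies those logits into a plain tensor; subtracting the maximum before exponentiating is a standard numerical-stability step and does not change the result.

```python
import torch

# Logits for test sample 10, copied from the output above
logits = torch.tensor([-8.5668, -0.4511, -11.3228, -3.0213, -2.3378,
                       -4.9395, -11.4141, 12.5245, -1.9677, 5.0611])

# softmax(z)_i = exp(z_i) / sum_j exp(z_j), shifted by max(z) for stability
exp = torch.exp(logits - logits.max())
probs = exp / exp.sum()

# Softmax preserves ordering, so the largest logit is also the largest probability
print(probs.argmax().item(), logits.argmax().item())  # 7 7
print(torch.allclose(probs, torch.softmax(logits, dim=0)))  # True
```

Because the ordering is preserved, `torch.argmax` gives the same predicted class whether it is applied to the logits or to the softmax output.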

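for i in range(10):
    print(f'Probability of digit {i}: {y_prob[10][i]:.2f}')

Probability of digit 0: 0.00
Probability of digit 1: 0.00
Probability of digit 2: 0.00
Probability of digit 3: 0.00
Probability of digit 4: 0.00
Probability of digit 5: 0.00
Probability of digit 6: 0.00
Probability of digit 7: 1.00
Probability of digit 8: 0.00
Probability of digit 9: 0.00

y_pred_index = torch.argmax(y_prob, dim=1)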

accuracy = (y_test == y_pred_index).float().sum() / len(y_test) * 100
print(f'The test accuracy is {accuracy:.2f}%!')
# The test accuracy is 96.11%!
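The test-time outputs above still carry a `grad_fn`, because the forward pass was recorded for autograd. For evaluation this bookkeeping is not needed. A possible pattern is sketched below; `evaluate` is a hypothetical helper, and the untrained model and random data are stand-ins, so the accuracy it prints is meaningless.

```python
import torch
import torch.nn as nn

def evaluate(model, x, y):
    """Hypothetical helper: accuracy in percent, computed without autograd."""
    model.eval()  # switch layers such as dropout to inference behavior
    with torch.no_grad():  # do not record operations for backpropagation
        logits = model(x)
    pred = logits.argmax(dim=1)
    accuracy = (pred == y).float().mean().item() * 100
    return accuracy, logits

torch.manual_seed(0)
model = nn.Sequential(nn.Linear(64, 10))  # same shape as the model above, untrained
x_fake = torch.rand(360, 64)              # random stand-in for x_test
y_fake = torch.randint(0, 10, (360,))     # random stand-in for y_test

accuracy, logits = evaluate(model, x_fake, y_fake)
print(f'{accuracy:.2f}%', logits.requires_grad)
```

With the trained model and the real `x_test` and `y_test`, the same helper would report the test accuracy without building a computation graph.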
